Package Exports

- @samchon/openapi

- @samchon/openapi/lib/composers/LlmSchemaComposer

- @samchon/openapi/lib/composers/LlmSchemaComposer.js

- @samchon/openapi/lib/composers/LlmSchemaComposer.mjs

- @samchon/openapi/lib/converters/OpenApiV3Downgrader

- @samchon/openapi/lib/converters/OpenApiV3Downgrader.js

- @samchon/openapi/lib/converters/OpenApiV3Downgrader.mjs

- @samchon/openapi/lib/converters/OpenApiV3_1Emender

- @samchon/openapi/lib/converters/OpenApiV3_1Emender.js

- @samchon/openapi/lib/http/HttpMigrateRouteFetcher

- @samchon/openapi/lib/http/HttpMigrateRouteFetcher.js

- @samchon/openapi/lib/index.js

- @samchon/openapi/lib/index.mjs

- @samchon/openapi/lib/structures/ILlmFunction

- @samchon/openapi/lib/structures/ILlmFunction.js

- @samchon/openapi/lib/utils/OpenApiExclusiveEmender

- @samchon/openapi/lib/utils/OpenApiExclusiveEmender.js

- @samchon/openapi/lib/utils/OpenApiExclusiveEmender.mjs

- @samchon/openapi/lib/utils/OpenApiValidator

- @samchon/openapi/lib/utils/OpenApiValidator.js

This package does not declare an exports field, so the exports above have been automatically detected and optimized by JSPM instead. If any package subpath is missing, it is recommended to post an issue to the original package (@samchon/openapi) to support the "exports" field. If that is not possible, create a JSPM override to customize the exports field for this package.

Readme

@samchon/openapi

flowchart

subgraph "OpenAPI Specification"

v20("Swagger v2.0") --upgrades--> emended[["OpenAPI v3.1 (emended)"]]

v30("OpenAPI v3.0") --upgrades--> emended

v31("OpenAPI v3.1") --emends--> emended

end

subgraph "OpenAPI Generator"

emended --normalizes--> migration[["Migration Schema"]]

migration --"Artificial Intelligence"--> lfc{{"LLM Function Calling"}}

lfc --"OpenAI"--> chatgpt("ChatGPT")

lfc --"Google"--> gemini("Gemini")

lfc --"Anthropic"--> claude("Claude")

lfc --"<i>Google</i>" --> legacy_gemini("<i> (legacy) Gemini</i>")

legacy_gemini --"3.0" --> custom(["Custom JSON Schema"])

chatgpt --"3.1"--> custom

gemini --"3.1"--> standard(["Standard JSON Schema"])

claude --"3.1"--> standard

end

Transform OpenAPI documents into type-safe LLM function calling applications.

@samchon/openapi converts any version of OpenAPI/Swagger documents into LLM function calling schemas for OpenAI GPT, Claude, and Gemini. It supports every OpenAPI version (Swagger 2.0, OpenAPI 3.0, and OpenAPI 3.1) with full TypeScript type definitions. The library also works with MCP (Model Context Protocol) servers, enabling seamless AI agent development.

Key Features:

- Universal OpenAPI Support: Works with Swagger 2.0, OpenAPI 3.0, and OpenAPI 3.1

- LLM Function Calling: Auto-generates function schemas for OpenAI, Claude, and Gemini

- Type-Safe Validation: Built-in validation with detailed error feedback for LLM responses

- MCP Integration: Compose function calling schemas from MCP servers

- Emended Specification: Standardized OpenAPI v3.1 format that removes ambiguities

Live Demo:

https://github.com/user-attachments/assets/e1faf30b-c703-4451-b68b-2e7a8170bce5

Watch how @samchon/openapi powers an AI shopping chatbot with @agentica.

Quick Start

npm install @samchon/openapi

Transform your OpenAPI document into an LLM function calling application in just a few lines:

import { HttpLlm, IHttpLlmApplication, IHttpLlmFunction, OpenApi } from "@samchon/openapi";

// Load and convert your OpenAPI document

const document: OpenApi.IDocument = OpenApi.convert(swagger);

// Generate LLM function calling schemas

const application: IHttpLlmApplication<"chatgpt"> = HttpLlm.application({

model: "chatgpt", // "chatgpt" | "claude" | "gemini"

document,

});

// Find a function by path and method

const func: IHttpLlmFunction<"chatgpt"> | undefined = application.functions.find(

(f) => f.path === "/bbs/articles" && f.method === "post"

);

if (!func) throw new Error("Function not found");

// Execute the function with LLM-composed arguments

const result: unknown = await HttpLlm.execute({

connection: { host: "http://localhost:3000" },

application,

function: func,

input: llmGeneratedArgs, // from OpenAI/Claude/Gemini

});

That's it! Your HTTP backend is now callable by AI.

OpenAPI Definitions

@samchon/openapi provides complete TypeScript definitions for all OpenAPI versions and introduces an "emended" OpenAPI v3.1 specification that serves as a universal intermediate format.

flowchart

v20(Swagger v2.0) --upgrades--> emended[["<b><u>OpenAPI v3.1 (emended)</u></b>"]]

v30(OpenAPI v3.0) --upgrades--> emended

v31(OpenAPI v3.1) --emends--> emended

emended --downgrades--> v20d(Swagger v2.0)

emended --downgrades--> v30d(OpenAPI v3.0)

Supported Specifications:

- Swagger v2.0

- OpenAPI v3.0

- OpenAPI v3.1

- OpenAPI v3.1 (emended) - Standardized format

What is "Emended" OpenAPI?

The emended specification removes ambiguities and duplications from OpenAPI v3.1, creating a cleaner, more consistent format. All conversions flow through this intermediate format.

Key Improvements:

- Operations: Merges parameters from path and operation levels, resolves all references

- JSON Schema: Eliminates mixed types, unifies nullable handling, standardizes array/tuple representations

- Schema Composition: Consolidates anyOf, oneOf, allOf patterns into simpler structures
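To make the JSON Schema improvements above more concrete, here is a minimal sketch of how a mixed-type or nullable schema could be rewritten as one explicit union. The `MixedSchema`, `SingleSchema`, and `normalize` names are hypothetical and exist only for this illustration; this is not the library's implementation, and the real `OpenApi.IJsonSchema` types are richer.

```typescript
// Hypothetical, simplified schema shapes for illustration only.
type MixedSchema = {
  type: string | string[];
  nullable?: boolean;
  [key: string]: unknown;
};
type SingleSchema = { type: string; [key: string]: unknown };
type NormalizedSchema = SingleSchema | { oneOf: SingleSchema[] };

// Rewrite `type: ["string", "null"]` and `nullable: true` as one explicit union.
function normalize(schema: MixedSchema): NormalizedSchema {
  const { type, nullable, ...rest } = schema;
  const types: string[] = Array.isArray(type) ? [...type] : [type];
  if (nullable === true && !types.includes("null")) types.push("null");

  // Other keywords (format, minLength, ...) stay on the non-null members.
  const members: SingleSchema[] = types.map(
    (t): SingleSchema => (t === "null" ? { type: "null" } : { ...rest, type: t }),
  );
  return members.length === 1 ? members[0]! : { oneOf: members };
}

// The OpenAPI 3.0 style and the OpenAPI 3.1 style end up in the same shape.
const fromV30 = normalize({ type: "string", nullable: true, format: "uuid" });
const fromV31 = normalize({ type: ["string", "null"], format: "uuid" });
console.log(JSON.stringify(fromV30) === JSON.stringify(fromV31));
```

Under these assumptions, a consumer only has to handle single-typed schemas and `oneOf` unions, which is the kind of simplification the emended format aims for.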

Converting Between Versions

import { OpenApi, OpenApiV3, SwaggerV2 } from "@samchon/openapi";

// Convert any version to emended format

const emended: OpenApi.IDocument = OpenApi.convert(swagger); // Swagger 2.0, OpenAPI 3.0/3.1

// Downgrade to older versions if needed

const v30: OpenApiV3.IDocument = OpenApi.downgrade(emended, "3.0");

const v20: SwaggerV2.IDocument = OpenApi.downgrade(emended, "2.0");

Validating OpenAPI Documents

Use typia for runtime validation with detailed type checking - far more accurate than other validators:

import { OpenApi, OpenApiV3, OpenApiV3_1, SwaggerV2 } from "@samchon/openapi";

import typia from "typia";

const document: any = await fetch("swagger.json").then(r => r.json());

// Validate with detailed error messages

const result: typia.IValidation<SwaggerV2.IDocument | OpenApiV3.IDocument | OpenApiV3_1.IDocument> =

typia.validate<SwaggerV2.IDocument | OpenApiV3.IDocument | OpenApiV3_1.IDocument>(document);

if (result.success) {

const emended: OpenApi.IDocument = OpenApi.convert(result.data);

} else {

console.error(result.errors); // Detailed validation errors

}

Try it in the playground: Type assertion | Detailed validation

LLM Function Calling

flowchart

subgraph "OpenAPI Specification"

v20("Swagger v2.0") --upgrades--> emended[["OpenAPI v3.1 (emended)"]]

v30("OpenAPI v3.0") --upgrades--> emended

v31("OpenAPI v3.1") --emends--> emended

end

subgraph "OpenAPI Generator"

emended --normalizes--> migration[["Migration Schema"]]

migration --"Artificial Intelligence"--> lfc{{"LLM Function Calling"}}

lfc --"OpenAI"--> chatgpt("ChatGPT")

lfc --"Google"--> gemini("Gemini")

lfc --"Anthropic"--> claude("Claude")

lfc --"<i>Google</i>" --> legacy_gemini("<i> (legacy) Gemini</i>")

legacy_gemini --"3.0" --> custom(["Custom JSON Schema"])

chatgpt --"3.1"--> custom

gemini --"3.1"--> standard(["Standard JSON Schema"])

claude --"3.1"--> standard

end

Turn your HTTP backend into an AI-callable service. @samchon/openapi converts your OpenAPI document into function schemas that OpenAI, Claude, and Gemini can understand and call.

Supported AI Models

IChatGptSchema - For OpenAI GPT

- Fully compatible with OpenAI's strict mode

- Strict mode is not recommended, because the validation feedback strategy is much more powerful

- Uses JSDoc tags in description to bypass OpenAI's schema limitations

IClaudeSchema - For Anthropic Claude ⭐ Recommended

- Follows JSON Schema standard most closely

- No artificial restrictions - cleanest type definitions

- Ideal default choice when you're unsure which model to use

- Works with every model except OpenAI's strict mode and legacy Gemini

IGeminiSchema - For Google Gemini

- Supports nearly all JSON Schema specifications (as of Nov 2025)

- Previous versions had severe restrictions, but these are now removed

[!NOTE]

You can also compose ILlmApplication from a TypeScript class using typia: https://typia.io/docs/llm/application

import { ILlmApplication } from "@samchon/openapi";

import typia from "typia";

const app: ILlmApplication<"chatgpt"> = typia.llm.application<YourClassType, "chatgpt">();

Complete Example

Here's a full example showing how OpenAI GPT selects a function, fills arguments, and you execute it:

import { HttpLlm, OpenApi, IHttpLlmApplication, IHttpLlmFunction } from "@samchon/openapi";

import OpenAI from "openai";

// 1. Convert OpenAPI to LLM function calling application

const document: OpenApi.IDocument = OpenApi.convert(swagger);

const application: IHttpLlmApplication<"chatgpt"> =

HttpLlm.application({

model: "chatgpt",

document,

});

// 2. Find the function by path and method

const func: IHttpLlmFunction<"chatgpt"> | undefined = application.functions.find(

(f) => f.path === "/shoppings/sellers/sale" && f.method === "post"

);

if (!func) throw new Error("Function not found");

// 3. Let OpenAI GPT call the function

const client: OpenAI = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

const completion: OpenAI.ChatCompletion = await client.chat.completions.create({

model: "gpt-4o",

messages: [

{ role: "system", content: "You are a helpful shopping assistant." },

{ role: "user", content: "I want to sell Microsoft Surface Pro 9..." }

],

tools: [{

type: "function",

function: {

name: func.name,

description: func.description,

parameters: func.parameters,

}

}],

});

// 4. Execute the function call on your actual server

const toolCall: OpenAI.ChatCompletionMessageToolCall =

completion.choices[0].message.tool_calls![0];

const result: unknown = await HttpLlm.execute({

connection: { host: "http://localhost:37001" },

application,

function: func,

input: JSON.parse(toolCall.function.arguments),

});

Validation Feedback - Fixing LLM Mistakes

The Problem: LLMs make type errors. A lot.

Even when your schema says Array<string>, GPT might return just "string". In real-world testing with OpenAI GPT-4o-mini on a shopping service:

- 1st attempt: 70% success rate ❌

- 2nd attempt (with validation feedback): 98% success rate ✅

- 3rd attempt: Never failed ✅

The Solution: Validate LLM output and send errors back for correction.

import { HttpLlm, OpenApi, IHttpLlmApplication, IHttpLlmFunction, IValidation } from "@samchon/openapi";

// Setup application

const document: OpenApi.IDocument = OpenApi.convert(swagger);

const application: IHttpLlmApplication<"chatgpt"> = HttpLlm.application({

model: "chatgpt",

document,

});

const func: IHttpLlmFunction<"chatgpt"> = application.functions[0];

// Validate LLM-generated arguments

const result: IValidation<unknown> = func.validate(llmArguments);

if (result.success === false) {

// Send detailed error feedback to LLM

return await retryWithFeedback({

message: "Type errors detected. Please correct the arguments.",

errors: result.errors, // Detailed error information

});

} else {

// Execute the validated function

const output: unknown = await HttpLlm.execute({

connection: { host: "http://localhost:3000" },

application,

function: func,

input: result.data,

});

return output;

}

The validation uses typia.validate<T>(), which provides the most accurate validation and extremely detailed error messages compared to other validators:

| Components | typia | TypeBox | ajv | io-ts | zod | C.V. |
|---|---|---|---|---|---|---|
| Easy to use | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Object (simple) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Object (hierarchical) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Object (recursive) | ✔ | ❌ | ✔ | ✔ | ✔ | ✔ |
| Object (union, implicit) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Object (union, explicit) | ✔ | ✔ | ✔ | ✔ | ✔ | ❌ |
| Object (additional tags) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Object (template literal types) | ✔ | ✔ | ✔ | ❌ | ❌ | ❌ |
| Object (dynamic properties) | ✔ | ✔ | ✔ | ❌ | ❌ | ❌ |
| Array (rest tuple) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Array (hierarchical) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Array (recursive) | ✔ | ✔ | ✔ | ✔ | ✔ | ❌ |
| Array (recursive, union) | ✔ | ✔ | ❌ | ✔ | ✔ | ❌ |
| Array (R+U, implicit) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Array (repeated) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Array (repeated, union) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Ultimate Union Type | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |

C.V. means class-validator.
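The validate-and-retry strategy described in this section can be sketched without any dependencies. Everything in the block is an assumption made for illustration: `Validation`, `ValidationError`, `validate`, `fakeLlm`, and `callWithFeedback` are hypothetical names, the validator is hand-written rather than generated by typia, and the "LLM" is a stub that makes the `Array<string>` mistake once and corrects it after seeing the errors.

```typescript
// Hypothetical local types mirroring a success/failure validation result;
// they are not imported from @samchon/openapi.
interface ValidationError { path: string; expected: string; value: unknown }
type Validation<T> =
  | { success: true; data: T }
  | { success: false; errors: ValidationError[] };

interface SearchArgs { tags: string[] }

// Hand-written validator for illustration only.
function validate(input: unknown): Validation<SearchArgs> {
  const tags = (input as { tags?: unknown } | null)?.tags;
  if (Array.isArray(tags) && tags.every((t) => typeof t === "string"))
    return { success: true, data: { tags } };
  return {
    success: false,
    errors: [{ path: "$input.tags", expected: "Array<string>", value: tags }],
  };
}

// Stand-in for an LLM call: wrong on the first try, corrected once it sees errors.
function fakeLlm(feedback: ValidationError[] | null): unknown {
  return feedback === null ? { tags: "laptop" } : { tags: ["laptop"] };
}

// Validate each attempt and feed the errors into the next one.
function callWithFeedback(maxAttempts: number): { attempts: number; args: SearchArgs } {
  let feedback: ValidationError[] | null = null;
  for (let attempt = 1; attempt <= maxAttempts; ++attempt) {
    const result = validate(fakeLlm(feedback));
    if (result.success === true) {
      return { attempts: attempt, args: result.data };
    } else {
      feedback = result.errors; // returned to the model on the next attempt
    }
  }
  throw new Error(`No valid arguments after ${maxAttempts} attempts`);
}

const outcome = callWithFeedback(3);
console.log(outcome.attempts, outcome.args.tags);
```

In a real agent, the stub would be replaced by a chat completion request that includes the error list in its messages, and the loop bound keeps a persistently wrong model from retrying forever.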

Model Context Protocol

flowchart

subgraph "JSON Schema Specification"

schemav4("JSON Schema v4 ~ v7") --upgrades--> emended[["OpenAPI v3.1 (emended)"]]

schema2910("JSON Schema 2019-09") --upgrades--> emended

schema2020("JSON Schema 2020-12") --emends--> emended

end

subgraph "OpenAPI Generator"

emended --normalizes--> migration[["Migration Schema"]]

migration --"Artificial Intelligence"--> lfc{{"LLM Function Calling"}}

lfc --"OpenAI"--> chatgpt("ChatGPT")

lfc --"Google"--> gemini("Gemini")

lfc --"Anthropic"--> claude("Claude")

lfc --"<i>Google</i>" --> legacy_gemini("<i> (legacy) Gemini</i>")

legacy_gemini --"3.0" --> custom(["Custom JSON Schema"])

chatgpt --"3.1"--> custom

gemini --"3.1"--> standard(["Standard JSON Schema"])

claude --"3.1"--> standard

end

@samchon/openapi provides better MCP function calling than using the mcp_servers property directly.

While MCP (Model Context Protocol) can execute server functions directly through the mcp_servers property, @samchon/openapi offers significant advantages through model specification support, validation feedback, and selector agent filtering for context optimization.

For example, the GitHub MCP server has 30 functions. Loading all of them via mcp_servers creates a huge context that often makes AI agents hallucinate and crash. Function calling with proper filtering avoids this problem.
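As a rough illustration of the filtering idea, the sketch below narrows a tool list before it is handed to the model. It is only a stand-in: a real selector agent would typically ask an LLM to choose candidates, whereas this example uses naive keyword matching, and `ToolSummary`, `selectTools`, and the three tool entries are hypothetical.

```typescript
// Hypothetical tool shape; real MCP tools also carry an input schema.
interface ToolSummary { name: string; description: string }

// Naive keyword scoring as a stand-in for a selector agent.
function selectTools(tools: ToolSummary[], request: string, limit: number): ToolSummary[] {
  const words = request.toLowerCase().split(/\W+/).filter((w) => w.length > 2);
  return tools
    .map((tool) => {
      const text = `${tool.name} ${tool.description}`.toLowerCase();
      return { tool, score: words.filter((w) => text.includes(w)).length };
    })
    .filter((entry) => entry.score > 0) // drop tools unrelated to the request
    .sort((a, b) => b.score - a.score) // most relevant first
    .slice(0, limit)
    .map((entry) => entry.tool);
}

// Made-up tool list for the example.
const tools: ToolSummary[] = [
  { name: "create_issue", description: "Open a new issue in a repository" },
  { name: "list_commits", description: "List commits on a branch" },
  { name: "merge_pull_request", description: "Merge an open pull request" },
];
const selected = selectTools(tools, "Please create an issue about the login bug", 2);
console.log(selected.map((t) => t.name));
```

Only the selected tools would then be passed as function calling schemas, so the prompt grows with the number of relevant functions instead of the size of the whole server.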

https://github.com/user-attachments/assets/72390cb4-d9b1-4d31-a6dd-d866da5a433b

GitHub MCP server via mcp_servers often crashes. However, function calling to GitHub MCP with @agentica works properly.

- Function calling demo: https://www.youtube.com/watch?v=rLlHkc24cJs

Creating MCP applications:

Use McpLlm.application() to create function calling schemas from MCP tools. The returned IMcpLlmApplication includes the IMcpLlmFunction.validate() function for validation feedback.

MCP supports all JSON schema specifications without restrictions:

- JSON Schema v4, v5, v6, v7

- JSON Schema 2019-09

- JSON Schema 2020-12

import {

IMcpLlmApplication,

IMcpLlmFunction,

IValidation,

McpLlm,

} from "@samchon/openapi";

const application: IMcpLlmApplication<"chatgpt"> = McpLlm.application({

model: "chatgpt",

tools: [...],

});

const func: IMcpLlmFunction<"chatgpt"> = application.functions.find(

(f) => f.name === "create",

)!;

const result: IValidation<unknown> = func.validate({

title: "Hello World",

body: "Nice to meet you AI developers",

thumbnail: "https://wrtnlabs.io/agentica/thumbnail.jpg",

});

console.log(result);

Utilization Cases

Agentica

https://github.com/wrtnlabs/agentica

Agentic AI framework that converts OpenAPI documents into LLM function calling schemas for ChatGPT, Claude, and Gemini. Uses @samchon/openapi to transform backend REST APIs into callable functions with automatic parameter validation and type-safe remote execution.

import { Agentica, assertHttpController } from "@agentica/core";

import OpenAI from "openai";

import typia from "typia";

import { MobileFileSystem } from "./services/MobileFileSystem";

const agent = new Agentica({

model: "chatgpt",

vendor: {

api: new OpenAI({ apiKey: "********" }),

model: "gpt-4.1-mini",

},

controllers: [

// functions from TypeScript class

typia.llm.controller<MobileFileSystem, "chatgpt">(

"filesystem",

new MobileFileSystem(),

),

// functions from Swagger/OpenAPI

// Uses @samchon/openapi under the hood:

// 1. OpenApi.convert() to emended format

// 2. HttpLlm.application() to create IHttpLlmApplication<"chatgpt">

// 3. IChatGptSchema composed for each API operation

assertHttpController({

name: "shopping",

model: "chatgpt",

document: await fetch(

"https://shopping-be.wrtn.ai/editor/swagger.json",

).then(r => r.json()),

connection: {

host: "https://shopping-be.wrtn.ai",

headers: { Authorization: "Bearer ********" },

},

}),

],

});

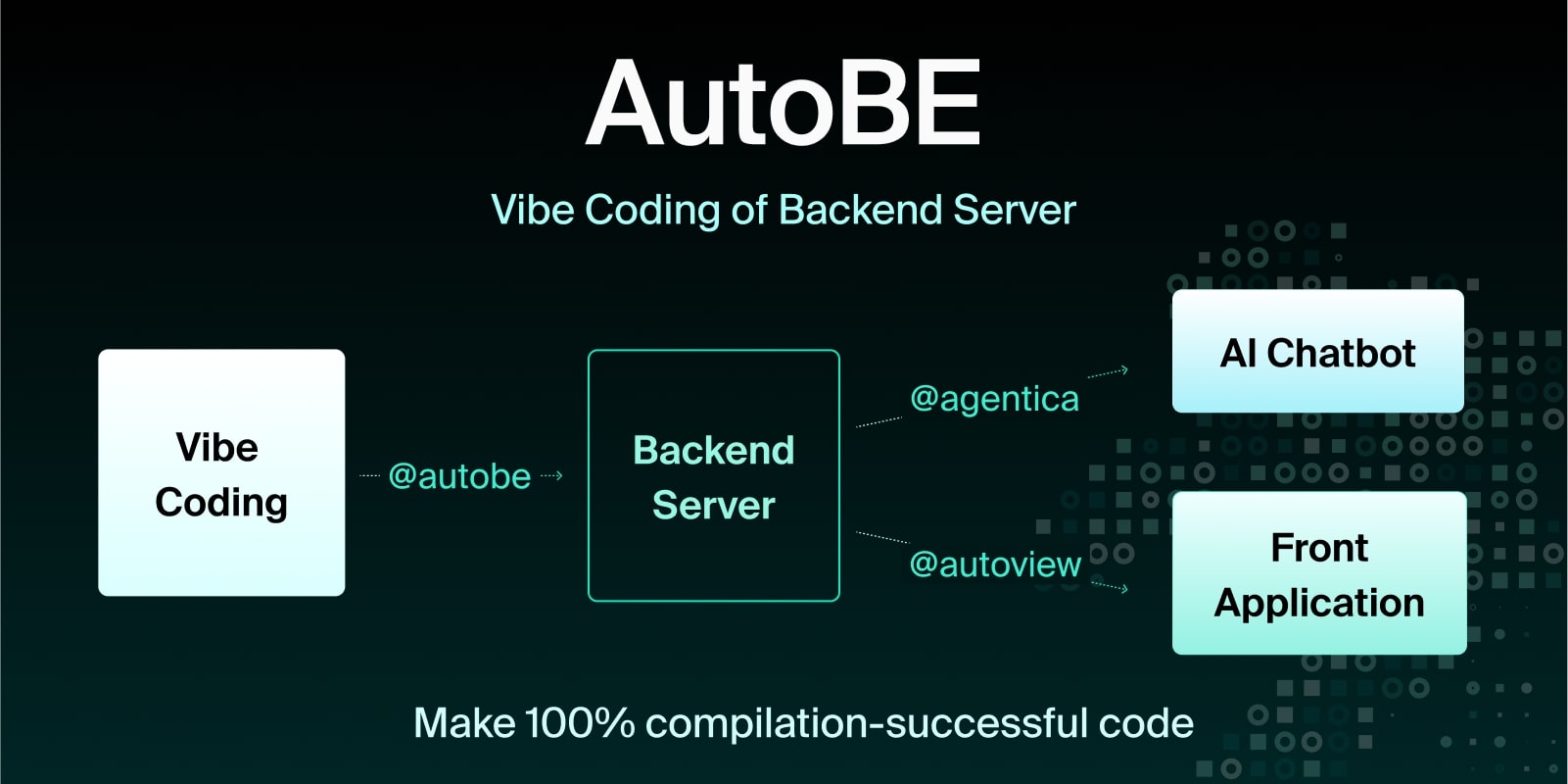

await agent.conversate("I wanna buy MacBook Pro");

AutoBE

AI backend code generator achieving 100% compilation success by using function calling to construct compiler AST instead of generating code text. For API specification design, uses @samchon/openapi types - AI calls compiler functions to build OpenAPI document structures that define REST endpoints and request/response schemas.

import { MicroAgentica } from "@agentica/core";

import { OpenApi } from "@samchon/openapi";

import OpenAI from "openai";

import typia from "typia";

const agent = new MicroAgentica({

model: "chatgpt",

vendor: {

api: new OpenAI({ apiKey: "********" }),

model: "gpt-4.1-mini",

},

controllers: [

// Compiler functions that receive/produce OpenApi.IDocument

typia.llm.controller<OpenApiWriteApplication, "chatgpt">(

"api",

new OpenApiWriteApplication(),

),

],

});

await agent.conversate("Design API specification, and generate backend app.");

class OpenApiWriteApplication {

// LLM calls this function with OpenApi.IDocument structure

// The type guarantees all operations have valid IJsonSchema definitions

public async write(document: OpenApi.IDocument): Promise<void> {

// document.paths contains OpenApi.IOperation[]

// Each operation.parameters, requestBody, responses use OpenApi.IJsonSchema

// Compiler validates schema structure before code generation

...

}

}