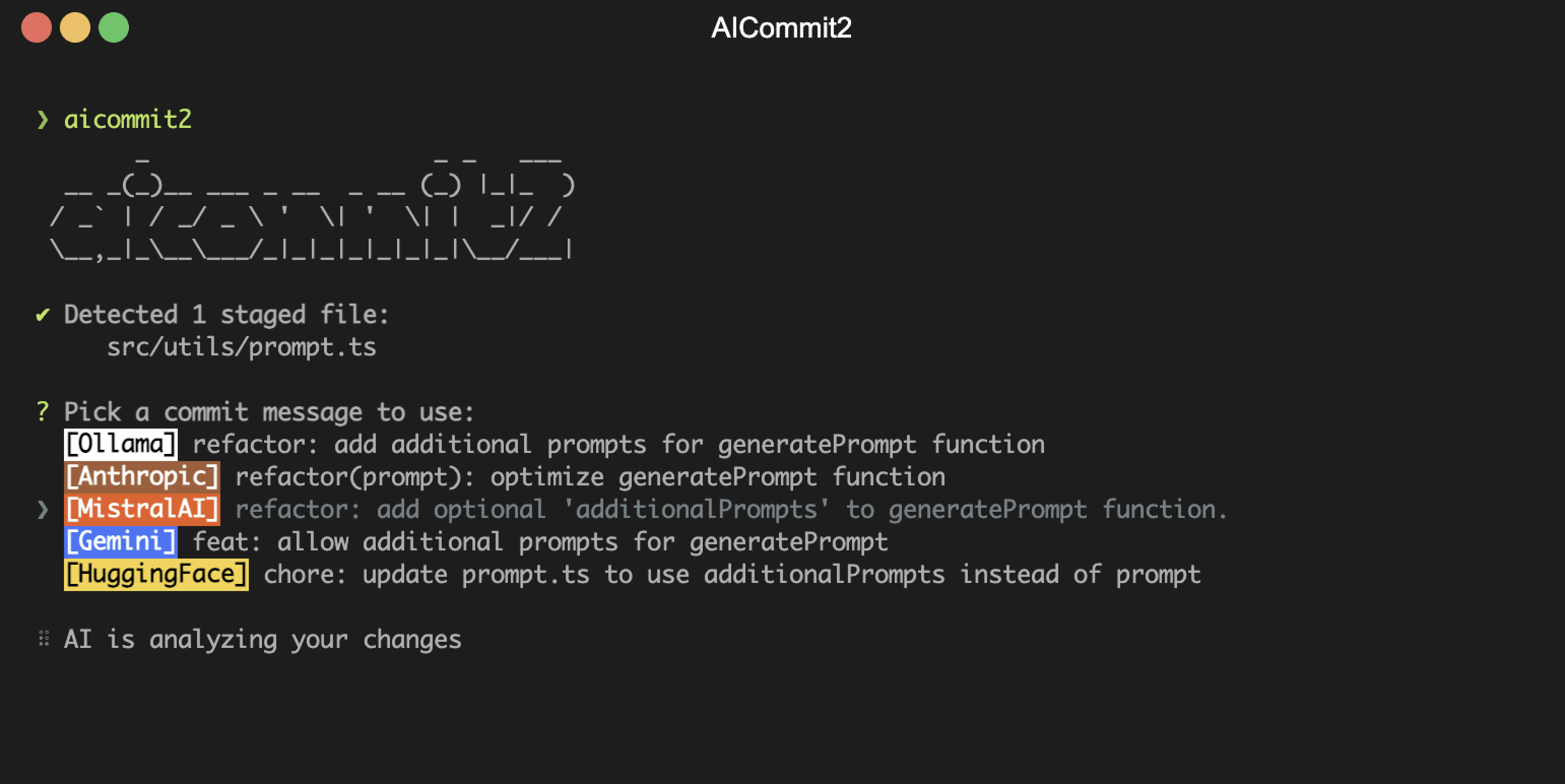

AICommit2

A Reactive CLI that generates git commit messages with Ollama, ChatGPT, Gemini, Claude, Mistral and other AI

🚀 Quick Start

# Install globally

npm install -g aicommit2

# Set up at least one AI provider

aicommit2 config set OPENAI.key=<your-key>

# Use in your git repository

git add .

aicommit2

📖 Introduction

aicommit2 is a reactive CLI tool that automatically generates Git commit messages using various AI models. It supports simultaneous requests to multiple AI providers, allowing users to select the most suitable commit message. The core functionalities and architecture of this project are inspired by AICommits.

✨ Key Features

- Multi-AI Support: Integrates with OpenAI, Anthropic Claude, Google Gemini, Mistral AI, Cohere, Groq, Ollama and more.

- OpenAI API Compatibility: Support for any service that implements the OpenAI API specification.

- Reactive CLI: Enables simultaneous requests to multiple AIs and selection of the best commit message.

- Git Hook Integration: Can be used as a prepare-commit-msg hook.

- Custom Prompt: Supports user-defined system prompt templates.

🤖 Supported Providers

Cloud AI Services

- OpenAI

- Anthropic Claude

- Gemini

- Mistral & Codestral

- Cohere

- Groq

- Perplexity

- DeepSeek

- OpenAI API Compatibility

Local AI Services

- Ollama

Setup

⚠️ The minimum supported version of Node.js is v18. Check your Node.js version with node --version.

- Install aicommit2:

npm install -g aicommit2

- Set up API keys (at least ONE key must be set):

aicommit2 config set OPENAI.key=<your key>

aicommit2 config set ANTHROPIC.key=<your key>

# ... (similar commands for other providers)

- Run aicommit2 with your staged files in a Git repository:

git add <files...>

aicommit2

👉 Tip: Use the aic2 alias if aicommit2 is too long for you.

Alternative Installation Methods

Nix Installation

If you use the Nix package manager, aicommit2 can be installed directly using the provided flake:

# Install temporarily in your current shell

nix run github:tak-bro/aicommit2

# Install permanently to your profile

nix profile install github:tak-bro/aicommit2

# Use the shorter alias

nix run github:tak-bro/aic2 -- --help

Using in a Flake-based Project

Add aicommit2 to your flake inputs:

{

  # flake.nix configuration file

  inputs = {

    nixpkgs.url = "github:NixOS/nixpkgs/nixos-unstable";

    aicommit2.url = "github:tak-bro/aicommit2";

  };

  # Rest of your flake.nix file

}

# Somewhere where you define your packages

{pkgs, inputs, ...}:{

  environment.systemPackages = [inputs.aicommit2.packages.x86_64-linux.default];

  # Or home packages

  home.packages = [inputs.aicommit2.packages.x86_64-linux.default];

}

Development Environment

To enter a development shell with all dependencies:

nix develop github:tak-bro/aicommit2

After setting up with Nix, you'll still need to configure API keys as described in the Setup section.

From Source

git clone https://github.com/tak-bro/aicommit2.git

cd aicommit2

npm run build

npm install -g .

Via VSCode Devcontainer

Add the feature to your devcontainer.json file:

"features": {

"ghcr.io/kvokka/features/aicommit2:1": {}

}

How it works

This CLI tool runs git diff to grab all your latest code changes, sends them to the configured AI providers, and returns the AI-generated commit messages.

If the diff is too large, the AI may not work properly. If you encounter an error saying the message is too long or is not a valid commit message, try reducing the size of the commit.

Usage

CLI mode

You can call aicommit2 directly to generate a commit message for your staged changes:

git add <files...>

aicommit2

aicommit2 passes unknown flags down to git commit, so you can pass in commit flags.

For example, you can stage all changes in tracked files as you commit:

aicommit2 --all # or -a

CLI Options

- --locale or -l: Locale to use for the generated commit messages (default: en)

- --all or -a: Automatically stage changes in tracked files for the commit (default: false)

- --type or -t: Git commit message format (default: conventional). It supports conventional and gitmoji

- --confirm or -y: Skip confirmation when committing after message generation (default: false)

- --clipboard or -c: Copy the selected message to the clipboard (default: false).

  - If you give this option, aicommit2 will not commit.

- --generate or -g: Number of messages to generate (default: 1)

  - Warning: This uses more tokens, meaning it costs more.

- --exclude or -x: Files to exclude from AI analysis

- --hook-mode: Run as a Git hook, typically used with prepare-commit-msg hook (default: false)

  - This mode is automatically enabled when running through the Git hook system

  - See Git hook section for more details

- --pre-commit: Run in pre-commit framework mode (default: false)

  - This option is specifically for use with the pre-commit framework

  - See Integration with pre-commit framework section for setup instructions

Example:

aicommit2 --locale "jp" --all --type "conventional" --generate 3 --clipboard --exclude "*.json" --exclude "*.ts"

Git hook

You can also integrate aicommit2 with Git via the prepare-commit-msg hook. This lets you use Git like you normally would, and edit the commit message before committing.

Automatic Installation

In the Git repository you want to install the hook in:

aicommit2 hook install

Manual Installation

If you prefer to set up the hook manually, create or edit the .git/hooks/prepare-commit-msg file:

#!/bin/sh

# your-other-hook "$@"

aicommit2 --hook-mode "$@"

Make the hook executable:

chmod +x .git/hooks/prepare-commit-msg

Integration with pre-commit Framework

If you're using the pre-commit framework, you can add aicommit2 to your .pre-commit-config.yaml:

repos:

  - repo: local

    hooks:

      - id: aicommit2

        name: AI Commit Message Generator

        entry: aicommit2 --pre-commit

        language: node

        stages: [prepare-commit-msg]

        always_run: true

Make sure you have:

- Installed pre-commit: brew install pre-commit

- Installed aicommit2 globally: npm install -g aicommit2

- Run pre-commit install --hook-type prepare-commit-msg to set up the hook

Note: The --pre-commit flag is specifically designed for use with the pre-commit framework and ensures proper integration with other pre-commit hooks.

Uninstall

In the Git repository you want to uninstall the hook from:

aicommit2 hook uninstall

Or manually delete the .git/hooks/prepare-commit-msg file.

Configuration

aicommit2 supports configuration via command-line arguments, environment variables, and a configuration file. Settings are resolved in the following order of precedence:

- Command-line arguments

- Environment variables

- Configuration file

- Default values

Configuration File Location

aicommit2 follows the XDG Base Directory Specification for its configuration file. The configuration file is named config.ini and is in INI format. It is resolved in the following order of precedence:

- AICOMMIT_CONFIG_PATH environment variable: If this environment variable is set, its value is used as the direct path to the configuration file.

- $XDG_CONFIG_HOME/aicommit2/config.ini: This is the primary XDG-compliant location. If $XDG_CONFIG_HOME is not set, it defaults to ~/.config/aicommit2/config.ini.

- ~/.aicommit2: This is a legacy location maintained for backward compatibility.

The first existing file found in this order will be used. If no configuration file is found, aicommit2 will default to creating a new config.ini file in the $XDG_CONFIG_HOME/aicommit2/ directory.
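The lookup order above can be sketched in a few lines of Python. This is only an illustration of the documented order, not aicommit2's actual implementation: the function name resolve_config_path and its parameters are hypothetical, and it assumes that a path given through AICOMMIT_CONFIG_PATH is used directly without an existence check.

```python
from pathlib import Path
from typing import Mapping


def resolve_config_path(env: Mapping[str, str], home: Path) -> Path:
    """Return the config file path following the documented lookup order."""
    # 1. An explicit override is used as the direct path (assumption: no existence check).
    override = env.get("AICOMMIT_CONFIG_PATH")
    if override:
        return Path(override)

    # 2. XDG location; falls back to ~/.config when XDG_CONFIG_HOME is unset.
    xdg_home = Path(env.get("XDG_CONFIG_HOME") or home / ".config")
    xdg_path = xdg_home / "aicommit2" / "config.ini"
    if xdg_path.is_file():
        return xdg_path

    # 3. Legacy location kept for backward compatibility.
    legacy_path = home / ".aicommit2"
    if legacy_path.is_file():
        return legacy_path

    # Nothing exists yet: a new config.ini would be created in the XDG directory.
    return xdg_path
```

Passing the environment and home directory as arguments (for example os.environ and Path.home()) keeps the sketch easy to try against a temporary directory.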

You can find the path of the currently loaded configuration file using the config path command:

aicommit2 config path

Reading and Setting Configuration

- READ: aicommit2 config get [<key> [<key> ...]]

- SET: aicommit2 config set <key>=<value>

- DELETE: aicommit2 config del <config-name>

Example:

# Get all configurations

aicommit2 config get

# Get specific configuration

aicommit2 config get OPENAI

aicommit2 config get GEMINI.key

# Set configurations

aicommit2 config set OPENAI.generate=3 GEMINI.temperature=0.5

# Delete a configuration setting or section

aicommit2 config del OPENAI.key

aicommit2 config del GEMINI

aicommit2 config del timeout

Environment Variables

You can configure API keys using environment variables. This is particularly useful for CI/CD environments or when you don't want to store keys in the configuration file.

# OpenAI

OPENAI_API_KEY="your-openai-key"

# Anthropic

ANTHROPIC_API_KEY="your-anthropic-key"

# Google

GEMINI_API_KEY="your-gemini-key"

# Mistral AI

MISTRAL_API_KEY="your-mistral-key"

CODESTRAL_API_KEY="your-codestral-key"

# Other Providers

COHERE_API_KEY="your-cohere-key"

GROQ_API_KEY="your-groq-key"

PERPLEXITY_API_KEY="your-perplexity-key"

DEEPSEEK_API_KEY="your-deepseek-key"

Note: You can customize the environment variable name used for the API key with the envKey configuration property for each service.

Usage Example:

OPENAI_API_KEY="your-openai-key" ANTHROPIC_API_KEY="your-anthropic-key" aicommit2

Note: Environment variables take precedence over configuration file settings.

How to Configure in detail

aicommit2 offers flexible configuration options for all AI services, including support for specifying multiple models. You can configure settings via command-line arguments, environment variables, or a configuration file.

Command-line arguments: Use the format --[Model].[Key]=value. To specify multiple models, use the --[Model].model=model1,model2 format.

aicommit2 --OPENAI.locale="jp" --GEMINI.temperature="0.5" --OPENAI.model="gpt-4o,gpt-3.5-turbo"

Configuration file: Refer to Configuration File Location or use the set command. For array-like values like model, you can use either the model=model1,model2 comma-separated syntax or the model[]= syntax for multiple entries. This applies to all AI services.

# General Settings

logging=true

generate=2

temperature=1.0

# Model-Specific Settings

[OPENAI]

key="<your-api-key>"

temperature=0.8

generate=1

model="gpt-4o,gpt-3.5-turbo"

systemPromptPath="<your-prompt-path>"

[GEMINI]

key="<your-api-key>"

generate=5

includeBody=true

model="gemini-pro,gemini-flash"

[OLLAMA]

temperature=0.7

model[]=llama3.2

model[]=codestral

The priority of settings is: Command-line Arguments > Environment Variables > Model-Specific Settings > General Settings > Default Values.
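As a rough illustration of this priority chain, the sketch below resolves one setting by walking the layers from highest to lowest priority. It is not taken from aicommit2's source: resolve_setting, the "SERVICE.key" lookup keys, and the "general" section name are hypothetical, and only three of the documented defaults are included.

```python
from typing import Any, Mapping

# A few documented defaults (see General Settings); the rest are omitted for brevity.
DEFAULTS = {"generate": 1, "temperature": 0.7, "locale": "en"}


def resolve_setting(
    key: str,
    service: str,
    cli: Mapping[str, Any],
    env: Mapping[str, Any],
    file_config: Mapping[str, Mapping[str, Any]],
    defaults: Mapping[str, Any] = DEFAULTS,
) -> Any:
    """Return the first value found, checking layers in priority order."""
    layers = (
        cli.get(f"{service}.{key}"),              # command-line arguments
        env.get(f"{service}.{key}"),              # environment variables (hypothetical keying)
        file_config.get(service, {}).get(key),    # model-specific settings
        file_config.get("general", {}).get(key),  # general settings
        defaults.get(key),                        # default values
    )
    for value in layers:
        if value is not None:
            return value
    return None
```

With a file that sets temperature=1.0 globally and temperature=0.8 under [OPENAI], this sketch yields 0.8 for OPENAI and 1.0 for every other service, unless a command-line value overrides both.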

General Settings

The following settings can be applied to most models, but support may vary. Please check the documentation for each specific model to confirm which settings are supported.

| Setting | Description | Default |
|---|---|---|
| envKey | Custom environment variable name for the API key | - |
| systemPrompt | System Prompt text | - |
| systemPromptPath | Path to system prompt file | - |
| exclude | Files to exclude from AI analysis | - |
| type | Type of commit message to generate | conventional |
| locale | Locale for the generated commit messages | en |
| generate | Number of commit messages to generate | 1 |
| logging | Enable logging | true |
| includeBody | Whether the commit message includes body | false |
| maxLength | Maximum character length of the Subject of generated commit message | 50 |
| timeout | Request timeout (milliseconds) | 10000 |
| temperature | Model's creativity (0.0 - 2.0) | 0.7 |
| maxTokens | Maximum number of tokens to generate | 1024 |
| topP | Nucleus sampling | 0.9 |
| codeReview | Whether to include an automated code review in the process | false |
| codeReviewPromptPath | Path to code review prompt file | - |
| disabled | Whether a specific model is enabled or disabled | false |

👉 Tip: To set the General Settings for each model, use the following commands.

aicommit2 config set OPENAI.locale="jp"

aicommit2 config set CODESTRAL.type="gitmoji"

aicommit2 config set GEMINI.includeBody=true

envKey

- Allows users to specify a custom environment variable name for their API key.

- If envKey is not explicitly set, the system defaults to using an environment variable named after the service, followed by _API_KEY (e.g., OPENAI_API_KEY for OpenAI, GEMINI_API_KEY for Gemini).

- This setting provides flexibility for managing API keys, especially when multiple services are used or when specific naming conventions are required.

aicommit2 config set OPENAI.envKey="MY_CUSTOM_OPENAI_KEY"

envKey is used to retrieve the API key from your system's environment variables. Ensure the specified environment variable is set with your API key.
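The naming rule described above can be illustrated with a short Python sketch. The helper names api_key_env_name and read_api_key are hypothetical and exist only for this example; the sketch assumes the service name is simply upper-cased before _API_KEY is appended.

```python
from typing import Mapping, Optional


def api_key_env_name(service: str, env_key: Optional[str] = None) -> str:
    """Return the environment variable name to read the API key from."""
    # A custom envKey wins; otherwise fall back to <SERVICE>_API_KEY.
    return env_key or f"{service.upper()}_API_KEY"


def read_api_key(
    service: str, env: Mapping[str, str], env_key: Optional[str] = None
) -> Optional[str]:
    """Look up the API key in the given environment mapping (e.g. os.environ)."""
    return env.get(api_key_env_name(service, env_key))
```

For example, with OPENAI.envKey set to MY_CUSTOM_OPENAI_KEY, the key would be read from that variable instead of OPENAI_API_KEY.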

systemPrompt

- Allow users to specify a custom system prompt

aicommit2 config set systemPrompt="Generate git commit message."

systemPrompt takes precedence over systemPromptPath; the two are not applied at the same time.

systemPromptPath

- Allow users to specify a custom file path for their own system prompt template

- Please see Custom Prompt Template

- Note: Paths can be absolute or relative to the configuration file location.

aicommit2 config set systemPromptPath="/path/to/user/prompt.txt"

exclude

- Files to exclude from AI analysis

- It is applied together with the --exclude CLI option: files matched by either --exclude on the command line or the exclude general setting are excluded.

aicommit2 config set exclude="*.ts"

aicommit2 config set exclude="*.ts,*.json"

NOTE: The exclude option cannot be set per model. It is only supported in General Settings.
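To make the combined behavior concrete, here is a minimal Python sketch that merges patterns from both sources and filters a file list. It is an assumption-laden illustration: merge_excludes and files_for_analysis are hypothetical names, and the sketch uses Python's fnmatch-style matching, which may differ from the glob semantics aicommit2 actually applies.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List


def merge_excludes(cli_patterns: Iterable[str], config_value: str) -> List[str]:
    """Combine --exclude patterns with the comma-separated exclude setting."""
    config_patterns = [p.strip() for p in config_value.split(",") if p.strip()]
    merged: List[str] = []
    for pattern in [*cli_patterns, *config_patterns]:
        if pattern not in merged:  # drop duplicates, keep first-seen order
            merged.append(pattern)
    return merged


def files_for_analysis(files: Iterable[str], patterns: Iterable[str]) -> List[str]:
    """Keep only the files that match none of the exclude patterns."""
    patterns = list(patterns)
    return [f for f in files if not any(fnmatchcase(f, p) for p in patterns)]
```

In this sketch, running with --exclude "*.json" while exclude="*.ts,*.json" is configured would leave only files that are neither .json nor .ts.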

type

Default: conventional

Supported: conventional, gitmoji

The type of commit message to generate. Set this to "conventional" to generate commit messages that follow the Conventional Commits specification:

aicommit2 config set type="conventional"

locale

Default: en

The locale to use for the generated commit messages. Consult the list of codes in: https://wikipedia.org/wiki/List_of_ISO_639_language_codes.

aicommit2 config set locale="jp"

generate

Default: 1

The number of commit messages to generate to pick from.

Note, this will use more tokens as it generates more results.

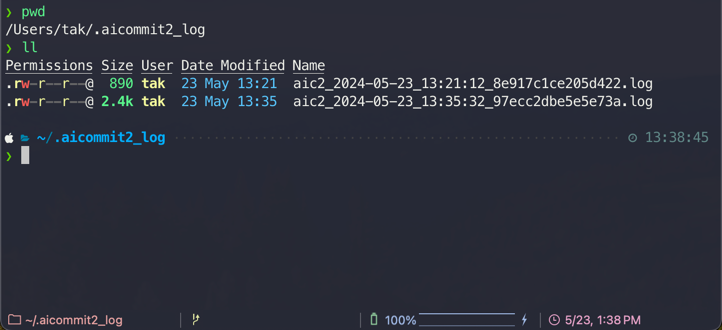

aicommit2 config set generate=2

logging

Default: true

This boolean option controls whether the application generates log files. When enabled, both the general application logs and the AI request/response logs are written to their respective paths. For a detailed explanation of all logging settings, including how to enable/disable logging and manage log files, please refer to the main Logging section.

includeBody

Default: false

This option determines whether the commit message includes a body. To include a body in the message, set it to true.

aicommit2 config set includeBody="true"

aicommit2 config set includeBody="false"

maxLength

The maximum character length of the Subject of generated commit message

Default: 50

aicommit2 config set maxLength=100

timeout

The timeout for network requests in milliseconds.

Default: 10_000 (10 seconds)

aicommit2 config set timeout=20000 # 20s

Note: Each AI provider has its own default timeout value, and if the configured timeout is less than the provider's default, the setting will be ignored.
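Read literally, the note means the effective timeout is whichever is larger: the configured value or the provider's own default. A one-function Python sketch of that reading follows; effective_timeout is a hypothetical name and the provider default passed in is illustrative, since provider defaults are not listed here.

```python
def effective_timeout(configured_ms: int, provider_default_ms: int) -> int:
    """Return the timeout that applies under the rule in the note above."""
    # A configured value below the provider default is ignored.
    return max(configured_ms, provider_default_ms)
```

Under this reading, a configured timeout only has an effect when it is at least as large as the provider's default.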

temperature

The temperature (0.0-2.0) is used to control the randomness of the output

Default: 0.7

aicommit2 config set temperature=0.3

maxTokens

The maximum number of tokens that the AI models can generate.

Default: 1024

aicommit2 config set maxTokens=3000

topP

Default: 0.9

Nucleus sampling, where the model considers the results of the tokens with top_p probability mass.

aicommit2 config set topP=0.2

disabled

Default: false

This option determines whether a specific model is enabled or disabled. If you want to disable a particular model, you can set this option to true.

To disable a model, use the following commands:

aicommit2 config set GEMINI.disabled="true"

aicommit2 config set GROQ.disabled="true"

codeReview

Default: false

The codeReview parameter determines whether to include an automated code review in the process.

aicommit2 config set codeReview=true

NOTE: When enabled, aicommit2 will perform a code review before generating commit messages.

⚠️ CAUTION

- The codeReview feature is currently experimental.

- This feature performs a code review before generating commit messages.

- Using this feature will significantly increase the overall processing time.

- It may significantly impact performance and cost.

- The code review process consumes a large number of tokens.

codeReviewPromptPath

- Allow users to specify a custom file path for code review

- Note: Paths can be absolute or relative to the configuration file location.

aicommit2 config set codeReviewPromptPath="/path/to/user/prompt.txt"

Available General Settings by Model

| | timeout | temperature | maxTokens | topP |
|---|---|---|---|---|
| OpenAI | ✓ | ✓ | ✓ | ✓ |
| Anthropic Claude | ✓ | ✓ | ✓ | ✓ |
| Gemini | ✓ | ✓ | ✓ | |
| Mistral AI | ✓ | ✓ | ✓ | ✓ |
| Codestral | ✓ | ✓ | ✓ | ✓ |
| Cohere | ✓ | ✓ | ✓ | ✓ |
| Groq | ✓ | ✓ | ✓ | ✓ |
| Perplexity | ✓ | ✓ | ✓ | ✓ |
| DeepSeek | ✓ | ✓ | ✓ | ✓ |
| Ollama | ✓ | ✓ | ✓ | |
| OpenAI API-Compatible | ✓ | ✓ | ✓ | ✓ |

All AI services support the following General Settings options:

- systemPrompt, systemPromptPath, codeReview, codeReviewPromptPath, exclude, type, locale, generate, logging, includeBody, maxLength

Configuration Examples

aicommit2 config set \

generate=2 \

topP=0.8 \

maxTokens=1024 \

temperature=0.7 \

OPENAI.key="sk-..." OPENAI.model="gpt-4o" OPENAI.temperature=0.5 \

ANTHROPIC.key="sk-..." ANTHROPIC.model="claude-3-haiku" ANTHROPIC.maxTokens=2000 \

MISTRAL.key="your-key" MISTRAL.model="codestral-latest" \

OLLAMA.model="llama3.2" OLLAMA.numCtx=4096 OLLAMA.watchMode=true

🔍 Detailed Support Info: Check each provider's documentation for specific limits and behaviors.

Logging

The application utilizes two distinct logging systems to provide comprehensive insights into its operations:

1. Application Logging (Winston)

This system handles general application logs and exceptions. Its behavior can be configured through the following settings in your config.ini file:

- logLevel:

  - Description: Specifies the minimum level for logs to be recorded. Messages with a level equal to or higher than the configured logLevel will be captured.

  - Default: info

  - Supported Levels: error, warn, info, http, verbose, debug, silly

- logFilePath:

  - Description: Defines the path to the main application log file. This setting supports date patterns (e.g., %DATE%) to automatically rotate log files daily.

  - Default: logs/aicommit2-%DATE%.log (relative to the application's state directory, typically ~/.local/state/aicommit2/logs on Linux or ~/Library/Application Support/aicommit2/logs on macOS).

- exceptionLogFilePath:

  - Description: Specifies the path to a dedicated log file for recording exceptions. Similar to logFilePath, it supports date patterns for daily rotation.

  - Default: logs/exceptions-%DATE%.log (relative to the application's state directory, typically ~/.local/state/aicommit2/logs on Linux or ~/Library/Application Support/aicommit2/logs on macOS).

2. AI Request/Response Logging

This system is specifically designed to log the prompts and responses exchanged with AI models for review and commit generation. These logs are stored in the application's dedicated logs directory.

- Log Location: These logs are stored in the same base directory as the application logs, which is determined by the system's state directory (e.g., ~/.local/state/aicommit2/logs on Linux or ~/Library/Application Support/aicommit2/logs on macOS).

- File Naming: Each AI log file is uniquely named using a combination of the date (YYYY-MM-DD_HH-MM-SS) and a hash of the git diff content, ensuring easy identification and chronological order.
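The naming scheme can be pictured with the following Python sketch. Only the timestamp format and the idea of hashing the diff come from the description above; the hash algorithm (SHA-256), the 8-character truncation, the underscore separator, the .log extension, and the function name ai_log_file_name are all assumptions made for illustration.

```python
import hashlib
from datetime import datetime


def ai_log_file_name(diff: str, now: datetime) -> str:
    """Build a log file name from a timestamp and a hash of the diff."""
    # Timestamp in the documented YYYY-MM-DD_HH-MM-SS shape.
    timestamp = now.strftime("%Y-%m-%d_%H-%M-%S")
    # Assumed: SHA-256 of the diff, shortened to 8 hex characters.
    digest = hashlib.sha256(diff.encode("utf-8")).hexdigest()[:8]
    return f"{timestamp}_{digest}.log"
```

Because the timestamp leads the name, sorting such file names alphabetically also sorts them chronologically.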

Enable/Disable Logging

The logging setting controls whether log files are generated. It can be configured both globally and for individual AI services:

- Global logging setting: When set in the general configuration, it controls the overall application logging (handled by Winston) and acts as a default for AI request/response logging.

- Service-specific logging setting: You can override the global logging setting for a particular AI service. If logging is set to false for a specific service, AI request/response logs will not be generated for that service, regardless of the global setting.

Removing All Logs

You can remove all generated log files (both application and AI logs) using the following command:

aicommit2 log removeAll

Custom Prompt Template

aicommit2 supports custom prompt templates through the systemPromptPath option. This feature allows you to define your own prompt structure, giving you more control over the commit message generation process.

Using the systemPromptPath Option

To use a custom prompt template, specify the path to your template file when running the tool:

aicommit2 config set systemPromptPath="/path/to/user/prompt.txt"

aicommit2 config set OPENAI.systemPromptPath="/path/to/another-prompt.txt"

With the commands above, OpenAI uses the prompt in another-prompt.txt, and all other models use prompt.txt.

NOTE: For the systemPromptPath option, set the template path, not the template content.

Template Format

Your custom template can include placeholders for various commit options.

Use curly braces {} to denote these placeholders for options. The following placeholders are supported:

- {locale}: The language for the commit message (string)

- {maxLength}: The maximum length for the commit message (number)

- {type}: The type of the commit message (conventional or gitmoji)

- {generate}: The number of commit messages to generate (number)
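The placeholder substitution described above might be sketched as follows. This is an illustrative Python snippet, not aicommit2's implementation; render_prompt is a hypothetical helper. It uses plain string replacement rather than str.format so that any other braces in the template are left untouched.

```python
from typing import Any, Mapping

SUPPORTED_PLACEHOLDERS = ("locale", "maxLength", "type", "generate")


def render_prompt(template: str, options: Mapping[str, Any]) -> str:
    """Replace each supported {placeholder} with the matching option value."""
    rendered = template
    for name in SUPPORTED_PLACEHOLDERS:
        rendered = rendered.replace("{" + name + "}", str(options[name]))
    return rendered


prompt = render_prompt(
    "Generate a {type} commit message in {locale}. "
    "Keep it under {maxLength} characters and provide {generate} messages.",
    {"locale": "en", "maxLength": 50, "type": "conventional", "generate": 2},
)
```

Unknown placeholders are deliberately left as-is in this sketch, so a typo such as {lang} would stay visible in the rendered prompt instead of raising an error.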

Example Template

Here's an example of how your custom template might look:

Generate a {type} commit message in {locale}.

The message should not exceed {maxLength} characters.

Please provide {generate} messages.

Remember to follow these guidelines:

1. Use the imperative mood

2. Be concise and clear

3. Explain the 'why' behind the change

Appended Text

Please note that the following text will ALWAYS be appended to the end of your custom prompt:

Lastly, Provide your response as a JSON array containing exactly {generate} object, each with the following keys:

- "subject": The main commit message using the {type} style. It should be a concise summary of the changes.

- "body": An optional detailed explanation of the changes. If not needed, use an empty string.

- "footer": An optional footer for metadata like BREAKING CHANGES. If not needed, use an empty string.

The array must always contain {generate} element, no more and no less.

Example response format:

[

{

"subject": "fix: fix bug in user authentication process",

"body": "- Update login function to handle edge cases\n- Add additional error logging for debugging",

"footer": ""

}

]

Ensure you generate exactly {generate} commit message, even if it requires creating slightly varied versions for similar changes.

The response should be valid JSON that can be parsed without errors.

This ensures that the output is consistently formatted as a JSON array, regardless of the custom template used.
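To show what a response in this format looks like to a consumer, here is a hedged Python sketch that validates the JSON array and assembles a commit message from one entry. The function names parse_commit_response and format_commit_message are hypothetical, and joining subject, body, and footer with blank lines is an assumption about how the parts would be combined.

```python
import json
from typing import Dict, List

REQUIRED_KEYS = ("subject", "body", "footer")


def parse_commit_response(raw: str, generate: int) -> List[Dict[str, str]]:
    """Parse the AI response and check it matches the requested format."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(data, list) or len(data) != generate:
        raise ValueError(f"expected a JSON array of exactly {generate} item(s)")
    for item in data:
        if not isinstance(item, dict) or any(key not in item for key in REQUIRED_KEYS):
            raise ValueError("each item needs subject, body and footer keys")
    return data


def format_commit_message(item: Dict[str, str]) -> str:
    """Join the non-empty parts with blank lines (assumed layout)."""
    parts = [item["subject"], item["body"], item["footer"]]
    return "\n\n".join(part for part in parts if part)
```

Empty body or footer strings are skipped in this sketch, so a response with only a subject produces a single-line commit message.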

Watch Commit Mode

Watch Commit mode allows you to monitor Git commits in real-time and automatically perform AI code reviews using the --watch-commit flag.

aicommit2 --watch-commit

This feature only works within Git repository directories and automatically triggers whenever a commit event occurs. When a new commit is detected, it automatically:

- Analyzes commit changes

- Performs AI code review

- Displays results in real-time

For detailed configuration of the code review feature, please refer to the codeReview section. The settings in that section are shared with this feature.

⚠️ CAUTION

- The Watch Commit feature is currently experimental

- This feature performs AI analysis for each commit, which consumes a significant number of API tokens

- API costs can increase substantially if there are many commits

- It is recommended to carefully monitor your token usage when using this feature

- To use this feature, you must enable watch mode for at least one AI model:

aicommit2 config set [MODEL].watchMode="true"

Upgrading

Check the installed version with:

aicommit2 --version

If it's not the latest version, run:

npm update -g aicommit2

Disclaimer and Risks

This project uses functionalities from external APIs but is not officially affiliated with or endorsed by their providers. Users are responsible for complying with API terms, rate limits, and policies.

Contributing

For bug fixes or feature implementations, please check the Contribution Guide.

Contributors ✨

Thanks goes to these wonderful people (emoji key):

- @eltociear 📖

- @ubranch 💻

- @bhodrolok 💻

- @ryicoh 💻

- @noamsto 💻

- @tdabasinskas 💻

- @gnpaone 💻

- @devxpain 💻

- @delenzhang 💻

- @kvokka 📖

If this project has been helpful, please consider giving it a Star ⭐️!

Maintainer: @tak-bro